Erkang Zheng, JupiterOne | AWS re:Invent 2022 - Global Startup Program

Well, hello everybody, John Walls here on theCUBE, continuing our segments here on the AWS Global Startup Showcase. We are at day three of re:Invent. Erkang Zheng is joining us now; he is the CEO and co-founder of JupiterOne. First off, before we get going talking about security and the big world for you guys, what's your take on the show? What's been going on out here at re:Invent?

Yeah, re:Invent has been one of my favorite shows. There are a lot of people here and a lot of topics. Of course it's not just cybersecurity; there's a lot of cloud infrastructure and just technology in general. If you walk the floor you see a lot of vendors, you go into sessions, and you can learn a lot.

But you're the hot topic, right? Everybody's focused on cyber.

Yeah, big time.

And with good reason, because as we know, the bad actors are getting even smarter, even faster, and even more nimble. So just paint the landscape for me in general right now as you see it: security, cloud security in particular, and where we are in that battle.

Well, we are clearly not winning, and I think that in itself is an interesting problem. It's not just cloud security. If you think about cybersecurity in general as an industry, it has not been around for that long, but if you look at its history, we haven't done that well. Pick another industry, say medicine, which has been around forever. If you look at the history of medicine, I would argue it has done tremendously well, because people live longer. When you get sick, you get access to health care.

Yeah, exactly.

You have solutions, and you can see the trend. There are problems in healthcare, of course, but the trend is good; it's going well. Not in cybersecurity: more breaches, more attacks, more attackers. We don't know what the hell we're doing with that many solutions. And that's been one of my struggles as
a former CSO and security practitioner for many years: why is it that we're not getting better?

All right, so I'm going to ask you the question.

Yeah, okay.

Why aren't we getting better? How come we can't stay ahead of the curve on this thing? For some reason it's like whack-a-mole times a hundred: every time we think we solve one problem, we have a hundred more that show up over here.

Exactly.

And we have to address that, and our attention keeps floating around.

Yeah, I think you said it right. We're taking this whack-a-mole approach, we're looking for the painkiller of the day, we're looking for the Band-Aids. To be fair to our industry, technology in general moves so quickly that security has been playing catch-up over time, and we're still playing catch-up. When you're playing catch-up, you can almost only look at the painkiller or the Band-Aid of the day so you can stop the bleeding. But I do think we've gotten to a point where we have enough painkillers and Band-Aids, and we need to start looking at how we can do better fundamentally with the basics, and do the basics well, because a lot of times it's the basics that get you into trouble.

So fundamentally, the foundation. If I hear you right, what you're saying is that it's a quickly changing industry, things are moving rapidly, but we're not blocking and tackling; we're not doing the X's and O's. So forget the changes; we've got to get back to the basics and do those things right.

Exactly. It seems so simple, but it's so hard. Think about building a startup, building a company: at one point we're blocking and tackling, and then when we grow to a certain size we have to figure out how to scale the business. This is the same problem that
happens in security as an industry. We've been blocking and tackling for so long, and the industry is so young, but we've gotten to a point where we have to figure out how to scale this in a fundamentally different way. I'll give you an example. When we say the basics, it's easy to think that, say, users having MFA enabled is one of the basics. Another basic would be having endpoint protection on your devices, maybe CrowdStrike or SentinelOne or Carbon Black or whatever. But the question is, how do you know it is working 100% of the time?

How do you know that?

How do you know, right?

You find out.

Exactly, that's right. And how do you know that you have 100% coverage on your endpoints? Those solutions are not going to tell you, because they don't know what they don't know. If it's not enabled, what's the negative that you are not seeing? That's one of the basics that you're not covering. So the fundamentals really come down to five questions that I think nobody has had a really good answer for until now. The five questions go: What do I have? Is it important, meaning what's important out of all the things I have? You have a lot; you could have millions of things. For those that are important, does it have a problem? And if it has a problem, who can fix it? Because the reality is that in most cases security teams are not the ones fixing the problems; they're the ones identifying them.

They're very good at recognizing, but not so good...

Exactly, at identifying the owner who can fix it. That could be a business owner, could be engineers. So it's asset ownership identification. Those are four questions, and then over time, whether it's over a week or a month or a quarter or a year: am I getting better? And then you just keep asking these questions in different areas, in different domains, with a
different lens: maybe that's endpoints, maybe that's cloud, maybe that's users, maybe that's products and applications. But it really boils down to these five questions. That's the foundation for any good security program. If you can do that well, I think we cover a lot of bases and we're going to be in much better shape than we have been.

All right, so where does JupiterOne come in, in terms of what you're providing? Because obviously you've identified this kind of pyramid...

Yes.

...this hierarchy of needs to address, and I assume, knowing you as I do and knowing the company as I do, you've got solutions.

That's exactly right. We precisely answer those five questions for any organization from an asset perspective, because the answers to all five of these questions are based in assets. It starts with knowing what I have. The overall reason cybersecurity is broken, I believe, is fundamentally that people do not understand, and cannot properly deal with, the complexity that we have within our own environments. Again, using medicine as an example: in order to come up with the right medicine, whether it's a vaccine for COVID-19 or a treatment for cancer or whatever the case may be, you have to start with the foundations of understanding both the pathogen and the human body, like DNA sequencing. Without those you cannot effectively produce the right medicine in modern medicine.

Sure.

That is the same thing that's happening in cybersecurity. We spend a lot of time putting on Band-Aids and patches, and we spend a lot of time doing attacker research from the outside, but we don't fundamentally understand, in a complete way, the complexity within our own environment in terms of digital assets. That's almost like the DNA of your own organization.

Which is kind of mind-blowing in a
way: if I'm hearing you right, the first step is to identify what you have.

That's right.

It seems just so basic that I should know what's under my hood. I should know what is valuable and what is not. I should prioritize what I really need to protect and what maybe can go on the second shelf.

Yeah, it has been a tough problem since the beginning of IT, not just the beginning of cybersecurity. In the history of IT we have this thing called the CMDB, the configuration management database, which is supposed to capture the configurations of IT assets. Over time that has become a lot more complex, and there's a lot more than just IT assets that we have to understand from a security and attack surface perspective. We have to understand IT environments, cloud environments, applications, users, access, data, all of those things. So we have to take a different approach, a sort of modern CMDB. How can we understand the complexity within all of those assets, not just independently within those silos but in a connected way, so we can understand not only the attack surface but also the attack paths that connect the dots from one thing to another? Because everything in the organization is actually connected. If you have a server or a device or a user that sits on an island, not connected to the rest of the organization, then why have it? It doesn't matter. So it's understanding that connective tissue, this entire map, the DNA-sequencing equivalent of a digital organization, that JupiterOne provides: visibility at a very granular level of assets and resources to answer those five questions.

And how do I get better at that, then? I mean, I
have you to help me, but internally, within our organization, and I don't want to be rude, do I have the skills for that? Do I have the internal horsepower for that, or is there some need to close that gap, and how do I do it?

I'll tell you two things. One, you mentioned the word skills, so let me start there, because this one is very interesting. We have a huge skills shortage in cybersecurity; we've all heard that for years. But if you dig deeper into it, why is that? We have a lot of talented people, so why do we still have a skills shortage? What's interesting is that what we're asking security people to do is mind-boggling. If you ask a security analyst to understand how to protect something or how to deal with an incident, you're asking that person not only to understand the security concepts and be a domain expert in security, but also to understand, at the same time, AWS or other clouds, or endpoints, or code, or applications, so that they can properly do the analysis and the response. It's impossible.

You have to have a person who's an expert in everything, who knows everything about everything.

That's right, it's impossible. So one thing we have to resolve is how to use technology like JupiterOne to provide an abstraction, so that there's automation in place to help security teams be better at their jobs without having to be experts in deep technology, just at the abstract level of understanding, because we can model the data and provide the analysis and visualization out of the box for them, so they can focus on the security practices. That's one. The second thing is that we have to change the mindset. Take vulnerability
management as an example. The mindset for vulnerability management has been "how do I manage findings?" Now we have to change it to something more proactive: how to manage assets. Think about Log4j. When it happened, everybody scrambled and said, "Which devices or which systems have Log4j? Does it matter? What's the impact? Who can fix it?" That goes back to those questions I mentioned before. And then they try to look for a solution at that time: "Where's that silver bullet that can give me the answers?" What we struggle with, though, is that I want to ask the question: where were you six months ago? Where were you six months ago, when you could have done the due diligence and put something in place that helps you understand all of these assets and connections, so you can go to one place and just ask that question when something like that hits the fan? If we do not fundamentally change the mindset to say I have to look at things not from a reactive, findings perspective but from an asset-centric, day-one perspective, and have this foundation, have this map built, we can't get there. It's like, if I need directions, I go to Google Maps, but the reason that works is that somebody has done the work of creating the map. If you don't have the map, and when the time comes you say "I've got to go somewhere" and expect the map to magically appear and show you the directions, it's not going to work.

Right. I imagine there are a lot of people out there listening right now thinking, "Oh boy." And that's what JupiterOne is all about: they're there to answer your "oh boy." Thanks for the time.

Of course.

I appreciate the insights as well. It's nice to know that at least somebody is reminding
us to keep the front door locked too. It's not just the back door and the side doors; keep that front door and that garage locked up too.

Definitely.

All right, we'll continue our coverage here at AWS re:Invent 22. This is part of the AWS Global Startup Showcase, and you're watching theCUBE, the leader in high-tech coverage.
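To make the five questions concrete, here is a minimal Python sketch of the asset-centric idea Zheng describes: assets and their relationships kept in one connected graph, so that triage, endpoint coverage gaps, Log4j-style lookups, and attack paths become queries instead of a scramble. This is an illustration under stated assumptions, not JupiterOne's data model or query language; the `Asset` fields, the `AssetGraph` class, the meaning of each edge, and the sample assets are all hypothetical.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Asset:
    """Hypothetical asset record; field names are illustrative, not a real schema."""
    id: str
    kind: str                                           # "What do I have?"
    critical: bool = False                              # "Is it important?"
    findings: list[str] = field(default_factory=list)   # "Does it have a problem?"
    owner: str | None = None                            # "Who can fix it?"


class AssetGraph:
    """Assets plus directed relationships, e.g. user -> device -> server."""

    def __init__(self) -> None:
        self.assets: dict[str, Asset] = {}
        self.edges: dict[str, set[str]] = {}

    def add(self, asset: Asset) -> None:
        self.assets[asset.id] = asset
        self.edges.setdefault(asset.id, set())

    def connect(self, src: str, dst: str) -> None:
        self.edges.setdefault(src, set()).add(dst)

    def triage(self) -> list[tuple[str, list[str], str | None]]:
        """Important assets that have a problem, with the owner who can fix them."""
        return [(a.id, a.findings, a.owner)
                for a in self.assets.values() if a.critical and a.findings]

    def affected_by(self, finding: str) -> list[tuple[str, str | None]]:
        """Log4j-style lookup: every asset with this finding, and its owner."""
        return sorted((a.id, a.owner) for a in self.assets.values()
                      if finding in a.findings)

    def uncovered(self, kind: str, agent_id: str) -> set[str]:
        """Assets of `kind` with no edge to the protecting agent (the gap the agent can't see)."""
        return {a.id for a in self.assets.values()
                if a.kind == kind and agent_id not in self.edges[a.id]}

    def attack_path(self, start: str, target: str) -> list[str] | None:
        """Shortest chain of relationships from start to target, via breadth-first search."""
        prev: dict[str, str | None] = {start: None}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            if node == target:
                path: list[str] = []
                cur: str | None = node
                while cur is not None:      # walk predecessors back to start
                    path.append(cur)
                    cur = prev[cur]
                return path[::-1]
            for nxt in self.edges.get(node, set()):
                if nxt not in prev:
                    prev[nxt] = node
                    queue.append(nxt)
        return None


def improving(open_critical: list[int]) -> bool:
    """'Am I getting better?': fewer open critical findings now than at the first snapshot."""
    return len(open_critical) >= 2 and open_critical[-1] < open_critical[0]


def demo_graph() -> AssetGraph:
    """Hypothetical sample environment used in the example below."""
    g = AssetGraph()
    for asset in (Asset("laptop-1", "device"), Asset("laptop-2", "device"),
                  Asset("edr", "agent"), Asset("alice", "user"),
                  Asset("db-1", "server", critical=True,
                        findings=["log4j"], owner="data-eng")):
        g.add(asset)
    g.connect("laptop-1", "edr")    # laptop-1 is protected by the endpoint agent
    g.connect("alice", "laptop-2")  # alice uses laptop-2
    g.connect("laptop-2", "db-1")   # laptop-2 can reach db-1
    return g


if __name__ == "__main__":
    g = demo_graph()
    print(g.triage())                      # [('db-1', ['log4j'], 'data-eng')]
    print(g.uncovered("device", "edr"))    # {'laptop-2'}
    print(g.attack_path("alice", "db-1"))  # ['alice', 'laptop-2', 'db-1']
```

The coverage check mirrors the point that an endpoint tool cannot report on devices it is not installed on: the gap only becomes visible when the inventory and the tool's relationships live in the same graph.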

SUMMARY :

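Erkang Zheng, CEO and co-founder of JupiterOne, argues that cybersecurity keeps losing because teams chase the painkiller of the day instead of doing the basics well. He frames the basics as five questions (what do I have, is it important, does it have a problem, who can fix it, and am I getting better) and describes JupiterOne as a connected, modern CMDB that maps assets and their relationships, so security teams can answer those questions, understand attack paths, and respond to events such as Log4j from an asset-centric starting point.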

SENTIMENT ANALYSIS :

ENTITIES

| Entity | Category | Confidence |
|---|---|---|
| five questions | QUANTITY | 0.99+ |
| John Wallace | PERSON | 0.99+ |
| AWS | ORGANIZATION | 0.99+ |
| six months ago | DATE | 0.99+ |
| four questions | QUANTITY | 0.99+ |
| first step | QUANTITY | 0.99+ |
| two things | QUANTITY | 0.99+ |
| over a week | QUANTITY | 0.99+ |
| Google Maps | TITLE | 0.98+ |
| Erkang Zheng | PERSON | 0.98+ |
| second thing | QUANTITY | 0.98+ |
| one | QUANTITY | 0.98+ |
| covid-19 | OTHER | 0.98+ |
| one place | QUANTITY | 0.98+ |
| Zhang | PERSON | 0.98+ |
| both | QUANTITY | 0.97+ |
| one problem | QUANTITY | 0.97+ |
| one thing | QUANTITY | 0.97+ |
| Jupiter | LOCATION | 0.96+ |
| second shelf | QUANTITY | 0.95+ |
| millions of things | QUANTITY | 0.93+ |
| a quarter | QUANTITY | 0.92+ |
| one point | QUANTITY | 0.9+ |
| 100 coverage | QUANTITY | 0.86+ |
| Band-Aids | ORGANIZATION | 0.85+ |
| Global startup showcase | EVENT | 0.85+ |
| a year | QUANTITY | 0.85+ |
| a lot of people | QUANTITY | 0.85+ |
| day three | QUANTITY | 0.84+ |
| years | QUANTITY | 0.82+ |
| a lot of people | QUANTITY | 0.82+ |
| first | QUANTITY | 0.81+ |
| re invent | EVENT | 0.8+ |
| a month | QUANTITY | 0.8+ |
| a hundred more | QUANTITY | 0.79+ |
| one of the things | QUANTITY | 0.77+ |
| favorite | QUANTITY | 0.76+ |
| my struggles | QUANTITY | 0.76+ |
| cmdb | TITLE | 0.74+ |
| 100 of | QUANTITY | 0.74+ |
| a hundred | QUANTITY | 0.73+ |
| lot of times | QUANTITY | 0.72+ |
| one of | QUANTITY | 0.71+ |
| lot of bases | QUANTITY | 0.71+ |
| time | QUANTITY | 0.71+ |
| one thing | QUANTITY | 0.69+ |
| re:Invent 2022 - Global Startup Program | TITLE | 0.67+ |
| ring | EVENT | 0.64+ |
| lot of | QUANTITY | 0.64+ |
| Reinventing irking | EVENT | 0.62+ |
| day | QUANTITY | 0.61+ |
| lot | QUANTITY | 0.61+ |
| re invent 22 | EVENT | 0.58+ |
| lot of times | QUANTITY | 0.57+ |
| strike | OTHER | 0.49+ |
| every time | QUANTITY | 0.43+ |
| Jupiter | TITLE | 0.43+ |
| JupiterOne | ORGANIZATION | 0.31+ |

Lucas Snyder, Indiana University and Karl Oversteyns, Purdue University | SuperComputing 22

(upbeat music) >> Hello, beautiful humans, and welcome back to Supercomputing. We're here in Dallas, Texas, giving you live coverage with theCUBE. I'm joined by David Nicholson. Thank you for being my left arm today. >> Thank you, Savannah. >> It's a nice little moral. Very excited about this segment. We've talked a lot about how the fusion between academia and the private sector is a big theme at this show. You can see multiple universities all over the show floor, as well as many of the biggest companies on earth. We were very curious to learn a little bit more about this from people actually in the trenches, and we are lucky to be joined today by two Purdue students. We have Lucas and Karl. Thank you both so much for being here. >> One Purdue, one IU, I think. >> Savannah: Oh. >> Yeah, yeah, yeah. >> I'm sorry. Well then, wait, let's give Indiana University their due. That's where Lucas is, and Karl is at Purdue. Sorry, folks. I apparently need to go back to school to learn how to read. (chuckles) In the meantime, I know you're in the middle of a competition. Thank you so much for taking the time out. Karl, why don't you tell us what's going on? What is this competition? What brought you all here? And then let's dive into some deeper stuff. >> Yeah, this competition. So we're a joint team between Purdue and IU. We've overcome our rivalries, age-old rivalries, to compete at the competition. It's a multi-part competition where we're going head to head against other teams from all across the world, benchmarking the supercomputing cluster that we designed. >> Was there a moment of rift at all when you came together? Or was everyone peaceful? >> We came together actually pretty nicely. Our two advisors were very encouraging, and so we overcame that; no hostility, basically. >> I love that. So what are you working on, and how long have you guys been collaborating on it? You can go ahead and start, Lucas.
>> So we've been prepping for this since the summer, and some of us even before that. >> Savannah: Wow. >> And so currently we're working on the application phase of the competition. Everybody has different specialties, and basically the competition gives you a set of rules, and you have to accomplish what they tell you to do in the allotted timeframe and run things very quickly. >> And so we saw, when we came and first met you, that there are lights and sirens and a monitor looking at the power consumption involved. So part of this is how much power is being consumed. >> Karl: That's right. >> Explain exactly what the rules are that you have to live within. >> So, yeah, the main constraints are time, as we mentioned, and power consumption. For the benchmarking phase, which was two days ago, there was a hard cap of 3,000 watts. You can't go over that, otherwise you're penalized: you have to rerun and start from scratch, basically. Now there's a dynamic cap for the application section that modulates at random times. We don't know when it's going to go down or when it's going to go back up, so we have to adapt to that in real time. >> David: Oh, interesting. >> Dealing with a little bit of real-world complexity, I guess, simulated here. I think that's pretty fascinating. I want to know, because I am going to just confess, when I was your age last week, I did not understand the power of supercomputing and high performance computing. Lucas, let's start with you. How did you know this was the path you wanted to go down in your academic career? >> David: Yeah, what's your background? >> Yeah, give us some. >> So my background is intelligent systems engineering, which is kind of a fusion: I'm doing bioengineering and also more classical computer engineering. So my background is biology, actually. But I decided to go down this path kind of on a whim.
My professor suggested it, and I've kind of fallen in love with it. I did my summer internship doing HPC and I haven't looked back. >> When did you think you wanted to go into this field? I mean, in high school, did you have a special teacher that sparked it? What was it? >> Lucas: That's funny that you say that. >> What was in your background? >> Yes, I mean, in high school, towards the end, I just knew. I saw this program at IU; it's pretty new, and I just thought this would be a great opportunity for me, and I'm loving it so far. >> Do you have family in tech, or is this a different path for you? >> Yeah, this is a different path for me, but my family is so encouraging, and they're very happy for me. They text me all the time. So I couldn't be happier. >> Savannah: Just felt that in my heart. >> I know. I was going to say, for the parents out there, get the tissues out. >> Yeah, yeah, yeah. (chuckles) >> These guys, they don't understand. But, so Karl, what's your story? What's your background? >> My background: I'm majoring in unmanned aerial systems, so drones and their commercial applications. Not immediately connected, as you might imagine, although there's actually more overlap than one might think. A lot of unmanned systems work today is remote sensing, which means there's a lot of image processing that takes place: mapping of a field, what have you, or some sort of object, like a silo. So a lot of it actually leverages high performance computing in order to map and visualize, replacing manual mapping that used to be done by humans in the field or from helicopters. So there's a lot of cost reduction there and efficiency increases. >> And when did you get the spark that said, I want to go to Purdue? You mentioned off camera that you're from Belgium. >> Karl: That's right. >> Did you come from Belgium to Purdue, or were you already in the States? >> No, so I have family that lives in the States, but I grew up in Belgium. >> David: Okay.
>> I knew I wanted to study in the States. >> But at what age did you think that science and technology was something you'd be interested in? >> Well, I've always loved computers from a young age. I've been breaking computers since before I can remember. (chuckles) Much to my parents' dismay. But yeah, I've always had a knack for technology, and that has always been a hobby of mine. >> And then I want to ask you this question, and then Lucas, and then Savannah will get some time. >> Savannah: It's cool, I'll just sit here and look pretty. >> Dream job. >> Karl: Dream job. >> Okay. So you're both undergrads. >> Savannah: Offering one of my questions. Kind of. It's adjacent, though. >> Okay. You're undergrads now? Is there grad school in your future? Do you feel that's necessary? Is that something you want to pursue? >> I think so. Entrepreneurship is something that's been in the back of my head for a while as well. So maybe that, or something. >> So when I say dream job, understand it could be for yourself. >> Savannah: So just piggyback. >> Dream thing after academia, or stay in academia? What do you think at this point? >> That's a tough question you're asking. >> You'll be able to review this video in 10 years. >> Oh boy. >> Give us your five-year plan, and then we'll have you back on theCUBE in 2027. >> What's the dream? There are people out here watching this. I'm like, go, hey, interesting. >> So, as I mentioned, entrepreneurship. I'm thinking I'll start a company at some point. >> David: Okay. >> Yeah. In what? I don't know yet. We'll see. >> David: Lucas, any thoughts? >> So after graduation, I am planning to go to grad school. IU has a great accelerated master's degree program, so I'll stay an extra year and get my master's. Dream job is, boy, that's impossible to answer, but I remember telling my dad earlier this year that I was so interested in what NASA was doing. They're sending a probe to one of the moons of Jupiter. >> That's awesome.
From a parent's perspective, the dream often is: let's get the kids off the payroll. So I'm sure your families are happy to hear that. >> I think these two will be all right in that department. >> I think they're going to be okay. >> Yeah, I love that. I was curious, I want to piggyback on that, because I think what NASA's doing is amazing; we have them on the show. Who doesn't love space? >> Yeah. >> I'm also an entrepreneur, though, so I very much empathize with that. I was going to ask about your dream job, but also: what companies here do you find the most impressive? I'll rephrase, because I was going to say, who would you want to work with? >> David: Anything you think is interesting? >> But yeah. Have you even had a chance to walk the floor? I know you've been busy competing. >> Karl: Very little. >> Yeah, I was going to say very little. Unfortunately I haven't been able to roam around very much. But I look around and I see names that I'm like, I can't even, it's crazy to see them. These are people who are so impressive in the space. These are people who are extremely smart. I'm surrounded by geniuses everywhere I look, I feel like, so. >> Savannah: That includes us. >> Yeah. >> He wasn't talking about us. Yeah. (laughs) >> I mean, it's hard to say. Any of these companies, I would feel very, very lucky to be a part of, I think. >> Well, there's a reason why both of you were invited to the party, so keep that in mind. Yeah. But so, not a lot of time because of... >> Yeah. Tomorrow's our day. >> Here to get work done. >> Oh yes. Tomorrow you get to play and go talk to everybody. >> Yes. >> And let them recruit you, because I'm sure that's what a lot of these companies are going to be doing. >> Yeah. Hopefully that's the plan. >> Have you had a second at all to look around, Karl? >> A little bit more. I've been going to the bathroom once in a while. (laughs) >> That's allowed. I mean, I can imagine that's a vital part of the journey.
>> I've turned my gaze a little bit to what's around, all kinds of stuff. Higher education seems to be very important in terms of presence here. I find that very, very impressive. Purdue has a big stand, IU as well, but also others from Europe and Asia. I think higher education has a lot of potential in this field. >> David: Absolutely. >> And it really is that union between academia and the private sector. We've seen a lot of it. But also, one of the things that's cool about HPC is that it's really not ageist. It hasn't been around for that long. Well, at this scale, obviously; this show's been going on since 1988, before you guys were even probably a thought. But I think it's interesting. It's so fun to get to meet you both. Thank you for sharing what you're doing and what your dreams are, Lucas and Karl. >> David: Thanks for taking the time. >> I hope you win, and we're going to get you off the show here as quickly as possible so you can get back to your teams and back to competing. David, great questions as always; thanks for being here. And thank you all for tuning in to theCUBE Live from Dallas, Texas, where we are at Supercomputing. My name's Savannah Peterson, and I hope you're having a beautiful day. (gentle upbeat music)
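The two power rules Karl describes, a hard 3,000-watt cap during benchmarking and a cap that moves at random times during the application phase, can be sketched as a simple proportional throttle. This is a hypothetical illustration, not the competition's rules engine or any team's actual tooling: the function names, the 5% safety margin, the sample demand figures, and the assumption that each node's draw can be capped and scales linearly with its limit are all assumptions.

```python
# Hypothetical sketch of the power rules described above; not the competition's tooling.
# Assumes each node's draw can be capped and that it scales linearly with its limit.
from __future__ import annotations

from collections.abc import Iterator


def node_limits(demand_w: list[float], cap_w: float, margin: float = 0.05) -> list[float]:
    """Per-node power limits whose sum stays within cap_w minus a safety margin."""
    budget = cap_w * (1.0 - margin)
    total = sum(demand_w)
    if total <= budget:
        return list(demand_w)              # under budget: no throttling needed
    scale = budget / total                 # throttle every node proportionally
    return [d * scale for d in demand_w]


def over_cap(samples_w: list[float], cap_w: float) -> bool:
    """Hard-cap rule: any sample above the cap means the run is penalized."""
    return any(s > cap_w for s in samples_w)


def control_loop(demand_w: list[float], caps_w: list[float],
                 margin: float = 0.05) -> Iterator[tuple[float, float]]:
    """Dynamic-cap rule: re-read the cap every interval and yield (cap, total allotted watts)."""
    for cap in caps_w:
        yield cap, sum(node_limits(demand_w, cap, margin))


if __name__ == "__main__":
    demand = [900.0, 900.0, 900.0, 900.0]  # hypothetical unthrottled draw of four nodes
    for cap, total in control_loop(demand, [3000.0, 2000.0, 3000.0]):
        print(f"cap {cap:.0f} W -> allotted {total:.1f} W")
```

Recomputing the limits from unthrottled demand at every interval, rather than from the previous limits, is what lets the sketch raise power again when the cap goes back up.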

SUMMARY :

Savannah Peterson and David Nicholson talk with Lucas Snyder of Indiana University and Karl Oversteyns of Purdue University, members of a joint Purdue and IU team competing at Supercomputing 22. They explain that teams benchmark a cluster they designed under a hard 3,000-watt power cap, followed by an application phase whose power limit changes at random times. Lucas describes coming to HPC from intelligent systems engineering and bioengineering, Karl explains how unmanned aerial systems rely on HPC for image processing and mapping, and both share their plans: grad school and an interest in NASA's work for Lucas, and eventually starting a company for Karl.

SENTIMENT ANALYSIS :

ENTITIES

| Entity | Category | Confidence |
|---|---|---|
| Savannah | PERSON | 0.99+ |
| David | PERSON | 0.99+ |
| David Nicholson | PERSON | 0.99+ |
| Belgium | LOCATION | 0.99+ |
| Karl | PERSON | 0.99+ |
| NASA | ORGANIZATION | 0.99+ |
| 3000 watts | QUANTITY | 0.99+ |
| Lucas | PERSON | 0.99+ |
| IU | ORGANIZATION | 0.99+ |
| Europe | LOCATION | 0.99+ |
| Karl Oversteyns | PERSON | 0.99+ |
| Savannah Peterson | PERSON | 0.99+ |
| five year | QUANTITY | 0.99+ |
| Asia | LOCATION | 0.99+ |
| Lucas Snyder | PERSON | 0.99+ |
| Dallas, Texas | LOCATION | 0.99+ |
| Purdue | ORGANIZATION | 0.99+ |
| two advisors | QUANTITY | 0.99+ |
| Tomorrow | DATE | 0.99+ |
| two | QUANTITY | 0.99+ |
| Purdue | LOCATION | 0.99+ |
| 1988 | DATE | 0.99+ |
| last week | DATE | 0.99+ |
| Jupiter | LOCATION | 0.99+ |
| both | QUANTITY | 0.99+ |
| Purdue University | ORGANIZATION | 0.99+ |
| 10 years | QUANTITY | 0.99+ |
| One | QUANTITY | 0.99+ |
| today | DATE | 0.99+ |
| two days ago | DATE | 0.98+ |
| one | QUANTITY | 0.98+ |
| Indiana University | ORGANIZATION | 0.98+ |
| Indiana University | ORGANIZATION | 0.97+ |
| earlier this year | DATE | 0.93+ |
| earth | LOCATION | 0.93+ |
| first | QUANTITY | 0.92+ |
| Supercomputing | ORGANIZATION | 0.9+ |
| 2027 | TITLE | 0.86+ |
| HPC | ORGANIZATION | 0.8+ |
| theCUBE | ORGANIZATION | 0.8+ |
| States | LOCATION | 0.56+ |
| second | QUANTITY | 0.48+ |
| 22 | QUANTITY | 0.38+ |

Harry Glaser, Modelbit, Damon Bryan, Hyperfinity & Stefan Williams, Snowflake | Snowflake Summit 2022

>>Thanks. Hey, everyone, welcome back to theCUBE's continuing coverage of Snowflake Summit 22, live from Caesars Forum in Las Vegas. Lisa Martin here. I have three guests here with me. We're going to be talking about Snowflake Ventures and the Snowflake Startup Challenge, which is in its second year. I've got Harry Glaser with me, co-founder and CEO of Modelbit, a Startup Challenge finalist. Damon Bryan joins us as well, the CTO and co-founder of Hyperfinity, also a Startup Challenge finalist. And Stefan Williams to my left here, VP of Corporate Development and Snowflake Ventures. Guys, great to have you all on this little mini panel this morning. >>Thank you. >>Thank you. >>Let's go ahead, Harry, and we'll start with you. Talk to the audience about Modelbit. What do you guys do? And then we'll unpack the Snowflake Startup Challenge. >>Modelbit is the easiest way for data scientists to deploy machine learning models directly into Snowflake. We make use of the latest Snowflake functionality, called Snowpark for Python, that allows those models to run adjacent to the data, so that machine learning models can be much more efficient and much more powerful than they were before. >>Awesome. Damon, give us an overview of Hyperfinity. >>Yes, so Hyperfinity is a decision intelligence platform. We help retailers and brands specifically make intelligent decisions through the use of their own customer data and product data, and put data science and AI into the heart of the decision makers across their business. >>Nice. Stefan, tell us about the Startup Challenge. We talked a little bit about it yesterday with CMO Denise Persson, but I know it's in its second year. Give us the impetus for it, what it's all about, and what these companies embody. >>Yeah, so this is the second year that we've done it.
It really came out of, well, it starts with Snowflake Ventures. When we started to invest in companies, we quickly realised that there's a massive opportunity for companies to be building on top of the Lego blocks of Snowflake. And so we opened up the competition. Last year was the inaugural competition, which Overlay Analytics won. Since then, you've seen a number of different functionalities and features as part of Snowflake, Snowpark being one of them. Native applications is a really exciting one going forward, which companies can really use to accelerate their ability to deliver best-in-class applications using best-in-class technology, to deliver real customer outcomes and value. So we've seen tremendous traction across the globe: 250 applicants across 50, well, I think 70 countries was mentioned today, so truly global in nature. And it's really exciting to see how some of the startups are taking Snowflake to new and interesting use cases, new personas and new industries. >>So you had over 250 software companies apply for this. How did you narrow it down to three? >>We did. Yeah. >>How did you do that? >>So, behind the scenes, we had a sub-judging panel, the ones you didn't see up on stage, which I was luckily part of. We had very distinct evaluation criteria that we evaluated every company against, and we took it in tranches, right? We took the first big group and tried to get that down to a top 50. From the top 50, we really went into the details: myself in Ventures with some of my venture partners, some of the marketing teams, some of the product and engineering team, all came together and evaluated all of these different companies to get to the top 10, which were our semifinalists. Then the semifinalists had a chance to present in front of the group.
We got to meet them over Zoom along the way, where they did a five-minute pitch followed by a Q&A, in a similar format, I guess, to what we just went through at the Startup Challenge live, to get to the top three. And here we are today, just coming out of the competition with the folks here at the table. >>Wow. Harry, talk to us about how you distilled down what Modelbit is doing into five minutes over Zoom, and then five minutes this morning in person. >>I think it was really fun to have that pressure test, where, you know, we've only been doing this for a short time. In fact, Modelbit has only been a company for four or five months now, and having this process where we pitch, and pitch again, and pitch again really helped us nail the one-sentence value proposition, which we hadn't done previously. So in that way, very grateful to Stefan and the team for giving us that opportunity. >>That helps tremendously. I can imagine being a four-to-five-month-old startup. I've worked with young startups before; messaging and the narrative are challenging. Who are we? What do we do? How are we changing or chasing the market? What are our customers saying we are? That's challenging. So this was a good opportunity for you. Damon, would you say the same for Hyperfinity? >>Yeah, definitely concur. It's really helped us to shape our value proposition early and how we speak about it. Data science is quite complicated stuff when you're trying to get across what you do, especially in retail, where we work. Part of what our platform does is help retailers make sense of data science and AI and implement that in commercial decisions. So you have to be really snappy with how you position things, and it's really helped us to do that. We're a little bit further down the line than these guys; we've been going for three years.
So we've had the benefit of working with a lot of retailers up to this point to actually identify what their problems are, and to shape our product and our proposition toward them. >>Are you primarily working with the retail industry? >>Yes, retail and CPG are our primary use cases. We've also seen it apply to any kind of consumer-related industry. >>Got it. Massive changes in retail and CPG the last couple of years, the rise of consumer expectations. It's not going to go back down, right? We're impatient. We want brands to know who we are. I want you to deliver relevant content to me: if I bought a tent and go back on your website, don't show me more tents. Show me things that go with it. We have this expectation. >>You just explained the whole business. >>But it's so challenging, because brands have to respond to that. What is the value for retailers of working with Hyperfinity and Snowflake together? What's that powerhouse? >>Yeah, exactly. So you're exactly right. The retail landscape is changing massively. There's inflation everywhere. The pandemic really impacted what consumers value in shopping with retailers, and those decisions are even harder for retailers to make. So that's what our platform does: it helps them make those decisions quickly, and gets the power of data science, or democratises it, into the hands of those decision makers. And Snowflake really underpins that. The scalability of Snowflake means that we can scale the data and the capability of the platform in tandem with it, and Snowflake has been innovating a lot, with things like Snowpark and then the new announcements, Unistore and the Native App Framework, really helping us to make developments to our product as quickly as Snowflake is. So it's really beneficial. >>You get kind of that tailwind from Snowflake's acceleration, it sounds like. >>Exactly that. Yeah.
So as soon as we hear about new things, we're like, can we use it? Snowpark in particular was music to our ears, and we were actually part of the private preview for it, so we've been using it for a while. And again, with some of the new developments, I'll be on the phone to my guys saying, can we use this? Get it implemented pretty quickly. So, yeah. >>Fantastic. Sounds like a great, aligned partnership there. Harry, talk to us a little bit about Modelbit and how it's enabling customers. Maybe you've got a favourite customer example of Modelbit plus Snowflake, and the power that delivers to the end-user customer? >>Absolutely. As I said, it allows you to deploy the ML model directly into Snowflake. But sometimes you need to use the exact same machine learning model in multiple endpoints simultaneously. For example, one of our customers uses Modelbit to train and deploy a lead scoring model. So when somebody comes to your website and fills out the form saying they want to talk to a salesperson, is this going to be a really good customer, or maybe not so great? Maybe they won't pay quite as much. That lead scoring model actually runs on the website using Modelbit, so that you can display a custom experience to that customer. We know right away if this is an A, B, C or D lead, and therefore whether we show them a salesperson contact form or just put them in the marketing funnel, based on that lead score. Simultaneously, the business needs to know the score of the lead in the back office, so that they can do things like route it to the appropriate salesperson or update their sales forecasts for the end of the quarter. That same model also runs in the Snowflake warehouse, so that those back-office systems can be powered directly off of Snowflake. The fact that they're able to train and deploy one model into two production environments simultaneously, and manage all of that, is something they can only do with Modelbit.
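Harry's lead-scoring example, one model serving both a website endpoint and back-office scoring in the warehouse, can be sketched in plain Python. This is a minimal illustration under stated assumptions, not Modelbit's or Snowpark's API. The feature names, weights, and grade thresholds are hypothetical stand-ins for a trained model, and the warehouse side is simulated with a list of rows rather than a Snowflake table. What it shows is that both endpoints call the same scoring function, so a lead gets the same grade on the website and in the back office.

```python
import math

# Hypothetical weights standing in for a trained lead-scoring model.
# In practice these would come from whatever the data scientist trained.
WEIGHTS = {"company_size": 0.8, "pages_viewed": 0.3, "requested_demo": 1.5}
BIAS = -2.0


def score_lead(features: dict) -> float:
    """Logistic score in (0, 1); features missing from the input count as 0."""
    z = BIAS + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def grade(score: float) -> str:
    """Bucket a score into A/B/C/D lead grades (hypothetical thresholds)."""
    if score >= 0.75:
        return "A"
    if score >= 0.5:
        return "B"
    if score >= 0.25:
        return "C"
    return "D"


# Endpoint 1 (website): score one form submission and choose the experience.
def handle_form_submission(features: dict) -> str:
    g = grade(score_lead(features))
    return "salesperson_contact_form" if g in ("A", "B") else "marketing_funnel"


# Endpoint 2 (back office): score many warehouse rows with the same model,
# so routing and forecasting see exactly the grades the website used.
def score_rows(rows: list) -> list:
    return [{**row, "lead_grade": grade(score_lead(row["features"]))} for row in rows]


if __name__ == "__main__":
    hot_lead = {"company_size": 2, "pages_viewed": 3, "requested_demo": 1}
    print(handle_form_submission(hot_lead))  # salesperson_contact_form
    print(score_rows([{"id": 7, "features": {}}]))
```

Keeping a single `score_lead` function, rather than re-implementing the model in each environment, is the design choice the example is meant to highlight: the two consumers cannot drift apart.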
>>Lead scoring has traditionally been challenging for businesses in every industry, but it's so incredibly important, especially as consumers get pickier and pickier: I don't want to be measured, I want to opt out. It sounds like what Modelbit is enabling is especially alignment between sales and marketing within companies, which is also a big challenge many companies face. >>For us, it starts with the data scientist, right? The fact that sales and marketing may not be aligned might be an issue with the source of truth. Do we have a source of truth at this company? And so the idea that we can empower these data scientists, who are creating this value in the company, by giving them best-in-class tools and resources: that's our dream. That's our mission. >>Talk to me a little bit, Harry. You said you're only four to five months old. What were the gaps in the market that you and your co-founders saw and said, guys, we've got to solve this, and Snowflake is the right partner to help us do it? >>Absolutely. This is actually our second startup. We previously started a data analytics company that was somewhat successful, and it got caught up in this big wave of migration to cloud tools. All of the data tools moved, and are moving, from on-premise tools to cloud-based tools. This is really a migration that Snowflake catalysed. Snowflake, of course, is the ultimate in cloud-based data platforms, moving customers from on-premise data warehouses to modern cloud-based data clouds, and that dragged and pulled the rest of the industry along with it. Data science is one of the last pieces of the data industry that really hasn't moved to the cloud yet. We were almost surprised when we got done with our last startup and were thinking about what to do next.
The data scientists were still using Jupyter notebooks locally on their laptops, and we thought, this is a big market opportunity. We're almost surprised it hasn't been captured yet, and we're going to get in there. >>The other thing I think is really interesting about your business that we haven't talked about is just the flow of data, right? The data scientist is usually taking data out of a data lake, something like Snowflake, a data platform, and the security kind of breaks down, because then it's one, it's two, it's three, it's five, it's 20. At big companies it just gets really big. And so I think the really interesting thing about what you guys are doing is enabling the data to stay where it's at, not copying it out, keeping that security, that highly governed environment that big companies want, but allowing the data science community to really unlock that value from the data, which is really, really cool. >>It's wonderful for small startups like Modelbit, because when you talk to a big company, you want them to become a customer, you want them to use your data science technology. They want to see your FedRAMP certification. They want to talk to your CISO. And we're two guys in Silicon Valley with a dream. But if we can tell them the data is staying in Snowflake, and they have that conversation with Snowflake all the time and they trust them, and we're just built on top, that is an easy and very smooth way to have that conversation with the customer. >>Would you both say that there's credibility, like you've got street cred, especially being so early in this stage, with the partnership with Snowflake? Damon, we'll start with you. >>Yeah, absolutely. We've been using Snowflake from day one, from when we started our company, and it was a little bit of an unknown maybe two or three years ago, especially in retail.
A lot of retailers using legacy enterprise software are really starting to adopt the cloud now, and obviously Snowflake is really innovating in that area. So we use Snowflake to host our platform and our infrastructure, and we're finding a lot of retailers doing that as well, which makes it great when they want to use products like ours, because of the whole data sharing thing. It just becomes really easy, and it really simplifies ETL, data transformation and data sharing. >>Stefan, talk about the Startup Challenge and the innovation you've seen, and this is only the second year. I can just hear it from the two of you. And I know that the winner is back in India, but there's a tremendous amount of potential. To me, over the last 2.5 days, the flywheel that is Snowflake is getting faster and faster and more and more powerful. What are some of the things that excite you about working on the Startup Challenge, and some of the vision going forward that it's driving? >>I think the incredible thing about Snowflake is that we really focus as a company on the data infrastructure, and we're hyper-focused on enabling, incubating and encouraging partners to stand on top of a best-of-breed platform and unlock value across different personas within IT organisations, or industries, like Hyperfinity is doing. And so it's really incredible to see domain knowledge and subject matter expertise able to plug into best-of-breed underlying data infrastructure and drive real, meaningful outcomes for our customers and the community. It's just been incredible to see. I mean, we just saw three today. There were 250 incredible applications that passed the initial check of, do they tick all the boxes? And then, wow, they take you to these completely different areas you never thought the technology would go and solve.
And yet here we are talking about, you know, really interesting use cases that our partners are taking us to. >>250. Did that surprise you? And what was it last year? >>I think it was actually close to 240, 250 as well, and I think it was above 250 this year. That's the number in my head from last year, but I think it's actually above that. But the momentum, yeah, it's there. And again, we're going to be back next year with the full competition, too. >>Awesome. Harry, what are some of the things that are next for Modelbit as it progresses through its early stages? >>You know, one thing I've learned, and I think probably everyone at this table has internalised this lesson: product-market fit really is everything for a startup. And so for us, we're fortunate to have a set of early design partners who will become our customers, who we work with every day to build features, get their feedback, and make sure they love the product. The most exciting thing that happened to me here this week was that one of our early design partner customers wanted us to completely rethink how we integrate with Git, so that they can use their CI/CD workflows, the continuous integration that they have in their own Git platform, which is advanced. They've built it over many years, and so can they back all of Modelbit with their Git? It was one of those conversations, and I know this is getting a little bit into the weeds, but it was one of those conversations that, as a founder, makes your head explode. If we can have a critical mass of those conversations and get to that product-market fit, then the flywheel starts. Then the investment money comes. Then you're hiring a big team and you're off to the races. >>Awesome. Sounds like there's a lot of potential and momentum there. Damon, last question for you: what's next for Hyperfinity? Obviously you've got, we talked about, the street cred. >>Yeah. >>What's next for the business?
>>Well, yeah, we've got a lot of exciting times coming up. We're about to fully launch our product. We've been trading for three years as a consultancy in retail analytics and data science, and actually using our product before it was fully ready to launch. So we have the main launch of our product, and we're actually starting to onboard some clients now, as we speak. I think finding data science resources is, you know, a problem across the globe. So it really helps companies like ours that allow retailers, or whoever it is, to democratise the use of data science, and really help them in this current climate, where they're struggling to get world-class resources to enable them to do that. >>Right, such critical stuff. Stefan, take us home with your overall summary of Snowflake Summit. Fourth annual, nearly 10,000 people here, a huge increase from the last time we were all in person. What's your bumper sticker takeaway from Summit 22 and the Startup Challenge? >>That's a big closing statement for me. It's just been the energy. It's been incredible energy, incredible excitement. I feel the products that have been unveiled just unlock a tonne more value and a tonne more interesting things for companies like Modelbit, Hyperfinity and all the other startups here to go and think about. So there's just this incredible energy, incredible excitement, both internally, in our product and engineering teams, and with the partners I've spoken with here at the event and the portfolio companies that we've invested in. There's just this incredible momentum and excitement around what we're able to do with data in today's world, powered by an underlying platform like Snowflake. >>Right. And we've heard that energy, I think, through all 30-plus guests we've had on the show since Tuesday, and certainly from the two of you as well.
Congratulations on being finalists. We wish you the best of luck. You'll have to come back next year and talk about some of the great things. >>More great things. Hopefully we'll be exhibiting next year. >>Yeah, that's a good thing to look for. Guys, really appreciate your time and your insights. Congratulations on another successful Startup Challenge. >>Thank you so much. >>For Harry, Damon and Stefan, I'm Lisa Martin. You're watching theCUBE's continuing coverage of Snowflake Summit 22, live from Vegas. Stick around. We'll be right back with Dave Vellante and our final guest of the day.

SUMMARY :

Guys, great to have you all on this little mini panel this morning. But what do you guys do? Modelbit is the easiest way for data scientists to deploy machine learning models directly into Snowflake. Give us an overview of Hyperfinity. So we help. Give us the idea of the impetus for it, what it's all about and what these companies And it's really exciting to see how some of the startups are taking Snowflake to So you had over 250 software companies apply We did. So, behind the scenes, we had a sub-judging panel, I think it was really fun to have that pressure test where, you know, I can imagine being a 4 to 5 months young startup of snappy with how you position things. Yes, retail and CPG. I want you to deliver relevant content to me that just explain the whole business. it's so challenging because brands have to respond to that. You know, the scalability of Snowflake means that we can scale the You get kind of that tailwind from Snowflake's acceleration. I'm on the phone to my guys saying, Can we use this? bit plus Snowflake, the power that delivers to the end user customer? the business needs to know in the back office the score of the lead so that they can do things like route it to the appropriate I want to opt out. And so the idea that And Snowflake is the right partner to help us do it. dragged and pulled the rest of the industry along with it. So the data scientist is usually taking data out of a data lake, something But if we can tell them the data is staying in Snowflake and you have that conversation with Snowflake all the time Would you both say that there's credibility, like you got street cred, especially being so are really starting to adopt the cloud now with what they're doing and obviously Snowflake really innovating in that area. And I know that the winner is back in India, but tremendous amount of and really drive real meaningful outcomes for our customers in the community.
And what was it last year? But the momentum Harry, what are some of the things that are next for Modelbit as and the most exciting thing that happened to me here this week was one of our early design partner. Last question for you is what's next for Hyperfinity. So it really helps companies like ours that allow, you know, retailers or whoever it is to democratise Huge increase from the last time we were all in person. the partners that we have spoke. show since Tuesday and certainly from the two of you as well. Yeah, that's a good thing to look for. We'll be right back with Dave Vellante and our final guest of the day.

SENTIMENT ANALYSIS :

ENTITIES

| Entity | Category | Confidence |
|---|---|---|
| Damon Bryan | PERSON | 0.99+ |
| Stephane Williams | PERSON | 0.99+ |
| Lisa Martin | PERSON | 0.99+ |
| Harry Glaser | PERSON | 0.99+ |
| Harry | PERSON | 0.99+ |
| India | LOCATION | 0.99+ |
| 4 | QUANTITY | 0.99+ |
| Silicon Valley | LOCATION | 0.99+ |
| five minutes | QUANTITY | 0.99+ |
| four | QUANTITY | 0.99+ |
| Modlbit | PERSON | 0.99+ |
| Vegas | LOCATION | 0.99+ |
| Stephane | PERSON | 0.99+ |
| next year | DATE | 0.99+ |
| three years | QUANTITY | 0.99+ |
| five months | QUANTITY | 0.99+ |
| Last year | DATE | 0.99+ |
| Hyper Affinity | ORGANIZATION | 0.99+ |
| last year | DATE | 0.99+ |
| two | QUANTITY | 0.99+ |
| two guys | QUANTITY | 0.99+ |
| yesterday | DATE | 0.99+ |
| five | QUANTITY | 0.99+ |
| Stefan Williams | PERSON | 0.99+ |
| 250 applicants | QUANTITY | 0.99+ |
| 200 | QUANTITY | 0.99+ |
| 20 | QUANTITY | 0.99+ |
| 70 countries | QUANTITY | 0.99+ |
| Las Vegas | LOCATION | 0.99+ |
| Denise Pearson | PERSON | 0.99+ |
| Stefan | PERSON | 0.99+ |
| five minute | QUANTITY | 0.99+ |
| three | QUANTITY | 0.99+ |
| second year | QUANTITY | 0.99+ |
| Snowflake | ORGANIZATION | 0.99+ |
| this year | DATE | 0.99+ |
| today | DATE | 0.99+ |
| Tuesday | DATE | 0.99+ |
| one | QUANTITY | 0.99+ |
| three guests | QUANTITY | 0.98+ |
| 23 years ago | DATE | 0.98+ |
| Damon | PERSON | 0.98+ |
| 50 | QUANTITY | 0.98+ |
| 5 months | QUANTITY | 0.98+ |
| Model Bit | ORGANIZATION | 0.98+ |
| one model | QUANTITY | 0.97+ |
| 40 | QUANTITY | 0.97+ |
| one sentence | QUANTITY | 0.97+ |
| Snow Park | TITLE | 0.97+ |
| Snowflake Damon | ORGANIZATION | 0.97+ |
| this week | DATE | 0.96+ |
| top three | QUANTITY | 0.95+ |
| two production | QUANTITY | 0.95+ |
| both | QUANTITY | 0.94+ |
| 250 incredible applications | QUANTITY | 0.94+ |
| Fourth annual | QUANTITY | 0.94+ |
| Snowflake | EVENT | 0.94+ |
| top 50 | QUANTITY | 0.92+ |
| day one | QUANTITY | 0.92+ |
| Ventures | ORGANIZATION | 0.91+ |
| top 10 | QUANTITY | 0.91+ |
| above | QUANTITY | 0.9+ |

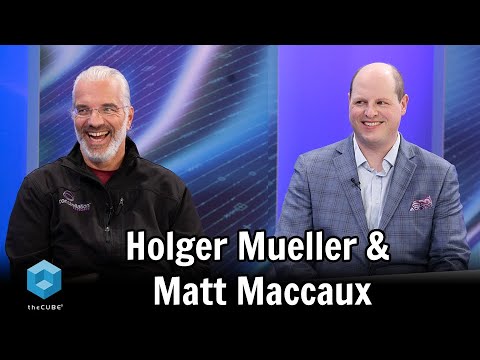

Did HPE GreenLake Just Set a New Bar in the On-Prem Cloud Services Market?

>> Welcome back to The Cube's coverage of HPE's GreenLake announcements. My name is Dave Vellante and you're watching the Cube. I'm here with Holger Mueller, who is an analyst at Constellation Research, and Matt Maccaux, who is the global field CTO of Ezmeral software at HPE. We're going to talk data. Gents, great to see you. >> Holger: Great to be here. >> So, Holger, what do you see happening in the data market? Obviously data's hot. You know, digital, I call it the forced march to digital. Everybody realizes, wow, digital business, that's a data business. We've got to get our data act together. What do you see in the market as the big trends, the big waves? >> We are all young enough or old enough to remember when people were saying data is the new oil, right? Nothing has changed, right? Data is the key ingredient that matters to the enterprise, which they have to store, which they have to enrich, which they have to use for their decision-making. It's the foundation of everything, if you want to go into machine learning or (indistinct). It's growing very fast, right? We now have the capability to look at all the data in the enterprise, which we weren't able to do 10 years ago. So data is at the center of everything. >> Yeah, it's even more valuable than oil, I think, right? 'Cause with oil, you can only use it once. Data, it's kind of polyglot. I can go in different directions, and it's amazing, right? >> It's the beauty of digital products, right? They don't get consumed, right? They don't get burned up, right? And no carbon footprint, right? "Oh wait, wait, we have to think about carbon footprint." Different story, right? To get to the data, you have to spend some energy. >> So it's that simple, right? I mean, it really is. Data is fundamental. It's got to be at the core. And so, Matt, what are you guys announcing today, and how does that play into what Holger just said? >> What we're announcing today is that organizations no longer need to make a difficult choice.
Prior to today, organizations were thinking, if I'm going to do advanced machine learning and really exploit my data, I have to go to the cloud. But all my data's still on premises because of privacy rules, industry rules. And so what we're announcing today, through GreenLake Services, is a cloud services way to deliver that same cloud-based analytical capability: machine learning and data engineering, through hybrid analytics. It's a unified platform to tie together everything from data engineering to advanced data science. And we're also announcing the world's first Kubernetes-native object store that is hybrid-cloud enabled, which means you can keep your data connected across clouds in a data fabric, or, Dave, as you say, mesh. >> Okay, can we dig into that a little bit? So you're essentially saying that you're going to have data in both places, right? Public cloud, edge, on-prem, and you're saying HPE is announcing a capability to connect them. I think you used the term fabric. I'm cool, by the way, with the term fabric; we'll parse that out another time. >> I'd love for you to discuss textiles. Fabric vs. mesh. For me, every fabric breaks down to mesh if you put it under a microscope. It's the same thing. >> Oh wow, now that's too detailed for my brain right this moment. But you're saying you can connect all those different estates, because data by its very nature is everywhere. You're going to unify that and manage it through sort of a single view? >> That's right. So the management is centralized. We need to be able to know where our data is being provisioned. But again, we don't want organizations to feel like they have to make the trade-off. If they want to use cloud service A in Azure and cloud service B in GCP, why not connect them together? Why not allow the data to remain in sync or not, through a distributed fabric? Because we use that term fabric over and over again.
But the idea is to let the data be where it most naturally makes sense, and exploit it. Monetization is an old tool, but exploit it in a way that works best for your users and applications. >> In sync or not, that's interesting. So it's my choice? >> That's right. Because the back of an automobile could be a teeny tiny edge location. It's not always going to be in sync until it connects back up with a training facility, but we still need to be able to manage that. Maybe that data gets persisted to a core data center. Maybe it gets pushed to the cloud. But we still need to know where that data is, where it came from, its lineage, what quality it has, and what security we're going to wrap around it. That all should be part of this fabric. >> Okay. So you've got essentially a governance model, or at least you're working toward that, and maybe it's not all baked today, but that's the north star: a connected fabric, a single management view, governed in a federated fashion? >> Right. And it's available through the most common APIs that these applications are already written in. Everybody today is talking S3: I've got to get all of my data into an object store, and it needs to be S3 compatible. So we are extending this capability to be S3 native, but optimized for performance. Today, when you put data in an object store, it's kind of one size fits all. Well, we know those streaming analytical capabilities, those high-performance workloads, need it to be tuned for them. So how about I give you a very small object on the very fastest disk in your data center, and maybe a cheaper location somewhere else for the rest? We're giving you that balance as part of the overall management estate. >> Holger, what's your take on this? I mean, Frank Slootman says we'll never, we're not going halfway house. We're never going to do on-prem, we're only in the cloud. So that basically says, okay, he's ignoring a pretty large market by choice.
You're not, Matt; you must love those words. But what do you see as the public cloud players' moves on-prem, particularly in this realm? >> Well, we've seen lots of cloud players who were only cloud coming back toward on-premise, right? We call it the next-generation compute platform, where I can move data and workloads between on-premise and, ideally, multiple clouds, right? Because I don't want to be locked into public cloud vendors. And we see two trends, right? One trend is that the traditional on-premise hardware suppliers have not scaled to cloud technology in terms of big data analytics. They just missed the boat on that in the past; this is changing. You guys are a traditional player changing this, so congratulations. The other thing is that there's been no innovation in the on-premise tech stack, right? For a long time, the technology stack to run modern applications has only been invested in the cloud. So what we've seen over the last two, three years, right, with the first one being Google with Kubernetes, with GKE on-premise, then Anthos, right, is bringing their tech stack, with compromises, to on-premises, right? Acknowledging exactly what we're talking about: the data is everywhere, data is important. Data gravity is there, right? It's just the network's fault, where the networks are too slow, right? If we could just move everything anywhere we want, like juggling two balls, then we'd be in a different place. But there hasn't been enough investment by the traditional IT players in that stack, with the modern stack being there. And now every public cloud player has an on-premise offering, with different flavors, different capabilities. >> I want to give you guys Dave's story of kind of the history, and you can course correct and tell me how this, Matt, maybe fits into what's happened with customers.
So, you know, before Hadoop, obviously you had to buy a big Oracle database, and you were running Unix, and you'd buy some big storage subsystem, and if you had any money left over, you know, maybe you'd do some actual analytics. But then Hadoop comes in, lowers the cost, and then S3 kneecaps the entire Hadoop market, right? >> I wouldn't say that; I wouldn't agree. Sorry to jump on your history. Because the fascinating thing is what Hadoop brought to the enterprise for the first time. You're absolutely right, it was affordable, right? But it's not only about affordability, because S3 has the affordability. The big thing is you can store information without knowing how to analyze it, right? So you mentioned Snowflake, right? Before, it was like an Oracle database. It was a star schema for the data warehouse, and so on. You had to make decisions about how to store that data, because compute capabilities and storage capabilities were too limited, right? That's what Hadoop blew away. >> I agree, no schema on write, right. But then that created data lakes, which created data swamps, and that whole mess, and then Spark comes in and helps clean it out, okay, fine. So we're cool with that. But in the early days of Hadoop, companies would have a Hadoop monolith, and they probably had their data catalog in Excel or Google Sheets, right? And so now, my question to you, Matt, is there are a lot of customers that are still in that world. What do they do? They've got an option to go to the cloud. I'm hearing that you're giving them another option? >> That's right. So we know that data is going to move to the cloud, as I mentioned. So let's keep that data in sync, and governed, and secured, like you expect. But for the data that can't move, let's bring those cloud-native services to your data center. And so a big part of this announcement is this unified analytics.
So that you can continue to run the tools that you want to today while bringing in those next generation tools based on Apache Spark, using libraries like Delta Lake, so you can do anything from Tableau through Presto SQL to advanced machine learning in your Jupyter notebooks, on-premises, where you know your data is secured. And if it happens to sit in an existing Hadoop data lake, that's fine too. We don't want our customers to have to make that trade-off as they go from one to the other. Let's give you the best of both worlds, or as they say, you can eat your cake and have it too. >> Okay, so. Now let's talk about developers on-prem, right? They've been kind of... If they really wanted to go cloud native, they had to go to the cloud. Do you feel like this changes the game? Do on-prem developers want that capability? Will they lean into that capability? Or will they say no, no, the cloud is cool? What's your take? >> I love developers, right? But it's about who makes the decision, who pays the developers, right? So the CXOs in the enterprises need exactly this; this is why we call it the next-gen computing platform, that you can move your code assets. It's very hard to build software, so it's very valuable to an enterprise. I don't want to have it limited to one single location or certain computing infrastructure, right? Luckily, we have Kubernetes to be able to move that, but I want to be able to deploy it on-premise if I have to. I want to be able to deploy it in the multiple clouds which are available. And that's the key part. And that makes developers happy too, because the code you write has got to run in multiple places. So you can build more code, better code, instead of building the same thing multiple times because of a little compiler change here, a little compiler change there. Nobody wants to do portability testing and rewriting, recertifying for certain platforms.
>> The head of application development or application architecture and the business are ultimately going to dictate that, number one. Number two, you're saying that developers shouldn't care because they can write once, run anywhere. >> That is the promise, and that's the interesting thing which is available now, because, thanks to Kubernetes as a container platform and the abstraction which containers provide, that makes everybody's life easier. But it goes much higher than the Head of Apps, right? This is the digital transformation strategy, the next generation applications the company has to build as a response to the pandemic, as a pivot, as digital transformation, as a digital disruption capability. >> I mean, I see a lot of organizations basically modernizing by building some kind of abstraction over their backend systems, modernizing it through cloud native, and then saying, hey, as you were saying Holger, run it anywhere you want, or connect to those cloud apps, or connect across clouds, connect to other on-prem apps, and eventually out to the edge. Is that what you see? >> It's so much easier said than done, though. Organizations have struggled so much with this, especially as we start talking about those data-intensive apps and workloads. Kubernetes and Hadoop? Up until now, organizations haven't been able to deploy those services. So, what we're offering as part of these GreenLake unified analytics services is a Kubernetes runtime. It's not ours. It's top-of-branch open source. And open source operators like Apache Spark, bringing in Delta Lake libraries, so that if your developer does want to use cloud native tools to build those next generation advanced analytics applications, but prod is still on-premises, they should just be able to pick that code up, and because we are deploying 100% open-source frameworks, the code should run as is. >> So, it seems like the strategy is to basically build, now that's what GreenLake is, right? It's a cloud.
It's like, hey, here are your options, use whatever you want. >> Well, and it's your cloud. That's what's so important about GreenLake: it's your cloud, in your data center or co-lo, with your data, your tools, and your code. And again, we know that organizations are going to go to a multi or hybrid cloud location, and through our management capabilities, we can reach out. If you don't want us to control those, that's okay, but we should at least be able to monitor and audit the data that sits in those other locations, the applications that are running. Maybe I register your GKE cluster. I don't manage it, but at least through a central pane of glass, I can tell the Head of Applications what that person's utilization is across these environments. >> You know, and you said something, Matt, that resonated with me, which is this is not trivial. I mean, it's not simple to do. I mean, what you see, you see a lot of customers or companies, vendors, and what they're doing is they'll wrap their stack in Kubernetes, shove it in the cloud, and it's essentially a hosted stack, right? And you're kind of taking a different approach. You're saying, hey, we're essentially building a cloud that's going to connect all these estates. And the key is you're going to have to keep, and you are, I think that's probably part of the reason why we're here, announcing stuff very quickly. A lot of innovation has to come out to satisfy that demand that you're essentially talking about. >> Because we've oversimplified things with containers, right? Because containers don't have what matters for data, and what matters for enterprise, which is persistence, right? I have to be able to turn my systems down, or I don't know when I'm going to use that data, but it has to stay there. And that's not solved in the container world by itself.
And that's what's coming now: the heavy lifting is done by people like HPE, to provide that persistence of the data across the different deployment platforms. And then there's just a need to modernize my on-premise platforms, right? I can't run on a server which is two, three years old, right? It's no longer safe, it doesn't have trusted identity, all the good stuff that you need these days, right? It cannot be operated remotely, or whatever happens there; two, three years is long enough for a server to have run its course, right? >> Well, you're a software guy, you hate hardware anyway, so just abstract that hardware complexity away from you. >> Hardware is a necessary evil, right? It's like TSA. I want to go somewhere, but I have to go through TSA. >> But that's a key point: let me buy a service. If I need compute, give it to me. And if I don't, I don't want to hear about it, right? And that's kind of the direction that you're headed. >> That's right. >> Holger: That's what you're offering. >> That's right, and specifically the services. So GreenLake's been offering infrastructure, virtual machines, IaaS, as a service. And we want to stop talking about that underlying capability because it's a dial tone now. What organizations and these developers want is the service. Give me a service or a function, like I get in the cloud, but I need to get going today. I need it within my security parameters, with access to my data and my tools, so I can get going as quickly as possible. And then beyond that, we're going to give you those cloud billing practices. Because just because you're deploying a cloud native service, if it's still being deployed via CapEx, you're not solving a lot of problems. So we also need to have that cloud billing model. >> Great. Well, Holger, we'll give you the last word, bring us home. >> It's very interesting to have the cloud qualities of subscription-based pricing maintained by HPE as the cloud vendor from somewhere else.
And that gives you that flexibility. And that's very important because data is essential to enterprise processes. And there are three reasons why data doesn't go to the cloud, right? We know that. It's privacy and residency requirements, or there is no cloud infrastructure in the country. It's performance, because network latency plays a role, right? Especially for critical applications. And then there's not invented here, right? Remember Charles Phillips saying, tell me how old the CIO is and I'll know if they're going to go to the cloud or not, right? So, it was not invented here. These are the things which keep data on-premise. You know that, and HPE is coming on with a very interesting offering. >> It's physics, it's laws, it's politics, and sometimes it's cost, right? Sometimes it's too expensive to move and migrate. Guys, thanks so much. Great to see you both. >> Matt: Dave, it's always a pleasure. >> All right, and thank you for watching theCUBE's continuous coverage of HPE's big GreenLake announcements. Keep it right there for more great content. (calm music begins)

SUMMARY :

And Matt Maccaux is the global field CTO I call it the force marks to digital. So data is main center to everything. 'Cause with oil, you can only use once. So to get to the data, you And so Matt, what are you I have to go to the cloud. capability to connect them, It's the same thing. You're going to unify that, and what, We need to be able to know So it's my choice? It's not always going to be in sync but that's the north star. I need to put it into an object store, But what do you see as for that in the past, I want to give you guys Sorry to jump on your history. And so now, my question to you, Matt, And if it happens to sit in they had to go to the cloud. because the code you write has and the business the company has to build as and eventually out to the edge. to pick that code up, So, it seems like the and audit the data that sits to have to keep, and you are, I have to be able to turn my systems down, guy, you hate hardware anyway, I have to go through TSA. And that's kind of the but I need to get going today. the last word, bring us home. I know if they're going to go Great to see you both. the Cubes continuous coverage

SENTIMENT ANALYSIS :

ENTITIES

| Entity | Category | Confidence |
|---|---|---|
| Dave Vellante | PERSON | 0.99+ |
| Frank Slootman | PERSON | 0.99+ |
| Matt | PERSON | 0.99+ |
| Matt Maccaux | PERSON | 0.99+ |
| Holger | PERSON | 0.99+ |
| Dave | PERSON | 0.99+ |
| Holger Mueller | PERSON | 0.99+ |
| two | QUANTITY | 0.99+ |
| 100% | QUANTITY | 0.99+ |
| Charles Phillips | PERSON | 0.99+ |
| Constellation Research | ORGANIZATION | 0.99+ |
| HPE | ORGANIZATION | 0.99+ |
| Excel | TITLE | 0.99+ |
| HP | ORGANIZATION | 0.99+ |
| today | DATE | 0.99+ |
| three years | QUANTITY | 0.99+ |
| GreenLake | ORGANIZATION | 0.99+ |
| three reasons | QUANTITY | 0.99+ |
| Today | DATE | 0.99+ |
| two balls | QUANTITY | 0.98+ |
| first | QUANTITY | 0.98+ |
| Oracle | ORGANIZATION | 0.98+ |
| 10 years ago | DATE | 0.98+ |
| Ezmeral | ORGANIZATION | 0.98+ |
| both worlds | QUANTITY | 0.98+ |
| first time | QUANTITY | 0.98+ |
| S3 | TITLE | 0.98+ |
| One trend | QUANTITY | 0.98+ |
| GreenLake Services | ORGANIZATION | 0.98+ |
| first one | QUANTITY | 0.98+ |
| Snowflake | TITLE | 0.97+ |
| both places | QUANTITY | 0.97+ |
| Kubernetes | TITLE | 0.97+ |
| once | QUANTITY | 0.96+ |
| both | QUANTITY | 0.96+ |
| two trends | QUANTITY | 0.96+ |
| Delta Lake | TITLE | 0.95+ |
| Hadoop | TITLE | 0.94+ |
| CapEx | ORGANIZATION | 0.93+ |
| Tableaux | TITLE | 0.93+ |
| Azure | TITLE | 0.92+ |
| GKE | ORGANIZATION | 0.92+ |
| Cubes | ORGANIZATION | 0.92+ |
| Unix | TITLE | 0.92+ |
| one single location | QUANTITY | 0.91+ |
| single view | QUANTITY | 0.9+ |
| Spark | TITLE | 0.86+ |
| Apache | ORGANIZATION | 0.85+ |
| pandemic | EVENT | 0.82+ |
| Hadoop | ORGANIZATION | 0.81+ |
| three years old | QUANTITY | 0.8+ |
| single | QUANTITY | 0.8+ |
| Kubernetes | ORGANIZATION | 0.74+ |
| big waves | EVENT | 0.73+ |
| Apache Spark | ORGANIZATION | 0.71+ |
| Number two | QUANTITY | 0.69+ |

Maurizio Davini, University of Pisa and Kaushik Ghosh, Dell Technologies | CUBE Conversation 2021